DataOps Explained: How To Not Screw It Up

Table of Contents

Data teams face the same scaling challenges that software teams solved a decade ago. The volume grows exponentially. The business demands faster delivery. Quality can’t slip. DataOps emerged as the answer, bringing DevOps principles to data engineering and data science teams who need to support their organization’s expanding data needs.

The approach mirrors what DevOps did for software development. Continuous integration and continuous deployment become standard practice for data pipelines. Automation replaces manual processes throughout the stack. Data engineers deliver reliable data to analysts and downstream stakeholders without the traditional bottlenecks and delays. Everything moves faster while quality actually improves.

The biggest data companies built this discipline out of necessity. Netflix, Uber, and Airbnb couldn’t scale their data operations using traditional methods. They adopted CI/CD principles from software engineering and created open source tools that let data teams work like engineering teams. Their success proved that data pipelines could be as reliable and automated as application deployments.

Over the past few years, DataOps has grown in popularity among data teams of all sizes as a framework that enables quick deployment of data pipelines while still delivering reliable and trustworthy data that is readily available.

In fact, if you’re a data engineer, you’re probably already applying DataOps processes and technologies to your stack, whether or not you realize it.

DataOps can benefit any organization, which is why we put together a guide to help clear up any misconceptions you might have around the topic. In this guide, we’ll cover:

- What is DataOps?

- DataOps vs. DevOps

- The DataOps framework

- Core principles of DataOps

- Five best practices for DataOps

- Top 6 benefits of DataOps

- Popular DataOps tools and software

- Implementing DataOps at your company

What is DataOps?

DataOps is a discipline that merges data engineering and data science teams to support an organization’s data needs. It takes the automation, continuous delivery, and collaboration that transformed software development and brings those same practices to data pipelines. The result is data operations that move at the speed of business.

The methodology fundamentally changes how data teams work. Engineers, data scientists, IT operations, analysts, and business stakeholders stop working in isolation and start operating as a unified team. Everyone shares visibility into the complete data lifecycle, from initial ingestion through transformation to final analytics. This isn’t just better communication. It’s shared ownership of outcomes.

Speed and quality aren’t opposing forces in DataOps. Automation eliminates the manual steps that slow everything down and introduce errors. Continuous monitoring catches issues immediately instead of after they’ve corrupted downstream reports. Changes that once required months of planning deploy safely in days through proven CI/CD practices.

Traditional data management creates natural bottlenecks. Engineering builds what they think the business wants. Analytics discovers problems only after reports fail. Business users wait months for simple metric changes while competitors move ahead. DataOps breaks down these silos by making the entire pipeline transparent and giving every stakeholder a voice in the process.

The goal is straightforward yet transformative. Deliver reliable, high-quality data to decision makers exactly when they need it. This means no more quarterly release cycles for urgent requests, no more data quality surprises during board presentations, and no more blame games when pipelines break. DataOps creates a smooth, predictable flow from raw data to actionable insights, with everyone aligned on making that happen.

DataOps vs. DevOps

While DataOps draws many parallels from DevOps, there are important distinctions between the two.

The key difference is DevOps is a methodology that brings development and operations teams together to make software development and delivery more efficient, while DataOps focuses on breaking down silos between data producers and data consumers to make data more reliable and valuable.

For years, DevOps teams have become integral to most engineering organizations, removing silos between software developers and IT as they facilitate the seamless and reliable release of software to production. DevOps rose in popularity among organizations as they began to grow and the tech stacks that powered them began to increase in complexity.

To keep a constant pulse on the overall health of their systems, DevOps engineers leverage observability to monitor, track, and triage incidents to prevent application downtime.

Software observability consists of three pillars:

- Logs: A record of an event that occurred at a given timestamp. Logs also provide context to that specific event that occurred.

- Metrics: A numeric representation of data measured over a period of time.

- Traces: Represent events that are related to one another in a distributed environment.

Together, the three pillars of observability give DevOps teams the ability to predict future behavior and trust their applications.

Similarly, the discipline of DataOps helps teams remove silos and work more efficiently to deliver high-quality data products across the organization.

DataOps professionals also leverage observability to decrease downtime as companies begint o ingest large amounts of data from various sources.

Data observability is an organization’s ability to fully understand the health of the data in their systems. It reduces the frequency and impact of data downtime (periods of time when your data is partial, erroneous, missing or otherwise inaccurate) by monitoring and alerting teams to incidents that may otherwise go undetected for days, weeks, or even months.

Like software observability, data observability includes its own set of pillars:

- Freshness: Is the data recent? When was it last updated?

- Distribution: Is the data within accepted ranges? Is it in the expected format?

- Volume: Has all the data arrived? Was any of the data duplicated or removed from tables?

- Schema: What’s the schema, and has it changed? Were the changes to the schema made intentionally?

- Lineage: Which upstream and downstream dependencies are connected to a given data asset? Who relies on that data for decision-making, and what tables is that data in?

By gaining insight into the state of data across these pillars, DataOps teams can understand and proactively address the quality and reliability of data at every stage of its lifecycle.

The DataOps framework

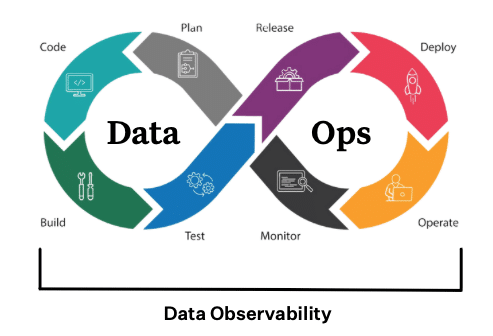

To facilitate faster and more reliable insight from data, DataOps teams apply a continuous feedback loop, also referred to as the DataOps lifecycle.

The DataOps lifecycle takes inspiration from the DevOps lifecycle, but incorporates different technologies and processes given the ever-changing nature of data.

The DataOps lifecycle allows both data teams and business stakeholders to work together in tandem to deliver more reliable data and analytics to the organization.

Here is what the DataOps lifecycle looks like in practice:

- Planning: Partnering with product, engineering, and business teams to set KPIs, SLAs, and SLIs for the quality and availability of data (more on this in the next section).

- Development: Building the data products and machine learning models that will power your data application.

- Integration: Integrating the code and/or data product within your existing tech and or data stack. (For example, you might integrate a dbt model with Airflow so the dbt module can automatically run.)

- Testing: Testing your data to make sure it matches business logic and meets basic operational thresholds (such as uniqueness of your data or no null values).

- Release: Releasing your data into a test environment.

- Deployment: Merging your data into production.

- Operate: Running your data into applications such as Looker or Tableau dashboards and data loaders that feed machine learning models.

- Monitor: Continuously monitoring and alerting for any anomalies in the data.

This cycle will repeat itself over and over again. However, by applying similar principles of DevOps to data pipelines, data teams can better collaborate to identify, resolve, and even prevent data quality issues from occurring in the first place.

Core principles of DataOps

DataOps solves the problems that plague most data teams. Siloed departments, slow releases, and questionable data quality aren’t inevitable. They’re symptoms of outdated processes. These principles show you how to fix them.

Collaboration across teams

Data work fails when teams operate in isolation. Engineering builds pipelines without understanding analyst needs. Analysts create reports without knowing system limitations. Business users lose trust when nobody explains what went wrong.

DataOps puts everyone in the same room. Engineers, analysts, and business stakeholders work together from the start. They share sprint reviews, daily standups, and Slack channels. When marketing needs a new dashboard, they help define requirements alongside the engineers who’ll build it.

This isn’t about more meetings. It’s about preventing the expensive mistakes that happen when teams don’t talk. A pipeline change that breaks downstream reports. A perfectly built feature that solves the wrong problem. These failures disappear when collaboration becomes standard practice.

The payoff is immediate. Projects move faster because requirements are clear from the start. Quality improves because everyone understands the full context. Trust builds because problems get discussed openly instead of discovered later. Shared responsibility replaces finger-pointing.

Continuous delivery for data

Your data pipelines should deploy like modern software. Version control, automated testing, and one-click deployments aren’t just for application code anymore.

Think of small, frequent updates instead of quarterly releases. Every SQL transformation gets tracked in Git. Tests run automatically when code changes. New features go live in days, not months. When something breaks, you roll back immediately.

The tools already exist. Jenkins, GitHub Actions, and similar platforms handle data deployments just fine. The hard part is changing your mindset. Stop treating data releases as special events that require manual approval chains and weekend work. Start treating them as routine operations that happen multiple times per week.

This approach fundamentally changes how you deliver value. Business questions get answered while they’re still relevant. New data sources come online before opportunities expire. Mistakes get caught in small batches instead of massive failures. Speed becomes your competitive advantage.

Automation first

Manual processes don’t scale. If humans are triggering pipelines, checking data quality by eye, or copying files between systems, you’re already behind.

Modern orchestration tools like Airflow or Prefect eliminate manual scheduling. Automated tests catch data anomalies before they reach production. Self-healing workflows retry failed jobs without waking anyone up at 2 AM.

Every manual step you eliminate pays compound dividends. Fewer errors. Faster recovery. More time for actual analysis instead of operational firefighting. The investment in automation scripts and tooling pays for itself within months, sometimes weeks.

Consider what happens at scale. A team managing ten pipelines manually might survive. That same team managing a thousand pipelines will drown. Automation isn’t just about efficiency. It’s about making growth possible. You can’t hire your way out of manual processes when data volumes double every year.

The real win comes from consistency. Automated processes run the same way every time. They don’t skip steps when rushed. They don’t make typos when tired. They don’t take vacations. This reliability becomes the foundation everything else builds on.

Quality at the source

Bad data compounds as it moves through your pipeline. A small error in raw data becomes a major incident when it reaches executive dashboards. That’s why quality checks belong at the beginning, not the end.

Build validation into your ingestion process. Check schemas, ranges, and business rules the moment data arrives. Use tools like Great Expectations or custom SQL tests to enforce standards automatically. When something fails validation, stop the pipeline and alert the team.

This approach costs less than fixing problems downstream. It’s the difference between catching a typo during editing versus issuing a correction after publication. Early detection prevents cascading failures and maintains trust in your data products.

The implementation is straightforward. Define your quality rules as code. Run them automatically on every data load. Track violations over time. Most importantly, make quality everyone’s responsibility, not just a QA team’s problem. When quality becomes part of the development process, it stops being an afterthought.

Observability as a pillar

You can’t fix what you can’t see. Data pipelines break in creative ways, and hoping users will report problems isn’t a strategy.

Track everything that matters. Data freshness, record counts, schema changes, and statistical distributions. Modern observability tools use machine learning to detect anomalies automatically. They’ll tell you when yesterday’s sales data looks suspiciously low or when a source system adds an unexpected column.

Good data observability means knowing about problems before your users do. An automated alert at 6 AM beats an angry email from the CEO at 9 AM. It transforms your team from reactive firefighters into proactive operators who prevent incidents before they impact the business.

But observability goes beyond just alerting. It provides the context you need to debug quickly. When a pipeline fails, you need to know what changed. Was it the code? The data volume? A schema modification upstream? Complete observability gives you that full picture instantly, turning hour-long investigations into five-minute fixes.

Think of it as your data’s vital signs. Just like doctors monitor heart rate and blood pressure, you monitor data flow rates and quality metrics. These indicators tell you when something’s wrong before it becomes critical. They also tell you when things are healthy, giving you confidence to make changes and improvements without fear.

Governance baked in

Security and compliance aren’t optional additions you bolt on later. They’re core requirements that belong in your pipeline architecture from day one.

Automate access controls so only authorized users see sensitive data. Mask personally identifiable information before it spreads through your systems. Track data lineage so you know exactly where information flows and how it transforms.

This isn’t about slowing down for bureaucracy. It’s about building guardrails that let you move fast safely. Automated compliance checks run continuously. Privacy rules enforce themselves. Audit logs are generated automatically. When governance is built into the pipeline, it enables speed rather than blocking it.

The alternative is painful. Retrofitting governance into existing pipelines takes months and breaks things. Compliance violations lead to fines and damaged reputation. Security breaches destroy customer trust. It’s far cheaper to build these protections from the start than to add them under pressure after an incident.

Metrics-driven improvement

Measure your data operations like you measure your data. Track pipeline success rates, incident resolution times, and data quality scores. These numbers tell you whether you’re actually improving or just staying busy.

Start with basics. How long does it take to detect and fix a broken pipeline? How many data errors reach production each week? What percentage of pipeline runs succeed on the first try? These metrics become your baseline for improvement.

Use the data to drive decisions. If the mean time to resolution is six hours, focus on better alerting. If data downtime exceeds ten hours weekly, invest in redundancy. The numbers don’t lie, and they’ll show you exactly where to focus your efforts.

Share these metrics widely. When the entire organization sees data operations performance, it creates accountability and recognition. Celebrate improvements. Learn from failures. Most importantly, use trends to predict problems before they happen. Rising error rates signal trouble ahead. Increasing resolution times indicate growing complexity. The metrics tell you what’s coming.

Five best practices for DataOps

Similar to our friends in software development, data teams are beginning to follow suit by treating data like a product. Data is a crucial part of an organization’s decision-making process, and applying a product management mindset to how you build, monitor, and measure data products helps ensure those decisions are based on accurate, reliable insights.

After speaking with hundreds of customers over the last few years, we’ve boiled down five key DataOps best practices that can help you better adopt this “data like a product” approach.

1. Gain stakeholder alignment on KPIs early, and revisit them periodically.

Since you are treating data like a product, internal stakeholders are your customers. As a result, it’s critical to align early with key data stakeholders and agree on who uses data, how they use it, and for what purposes. It is also essential to develop Service Level Agreements (SLAs) for key datasets. Agreeing on what good data quality looks like with stakeholders helps you avoid spinning cycles on KPIs or measurements that don’t matter.

After you and your stakeholders align, you should periodically check in with them to ensure priorities are still the same. Brandon Beidel, a Senior Data Scientist at Red Ventures, meets with every business team at his company weekly to discuss his teams’ progress on SLAs.

“I would always frame the conversation in simple business terms and focus on the ‘who, what, when, where, and why’,” Brandon told us. “I’d especially ask questions probing the constraints on data freshness, which I’ve found to be particularly important to business stakeholders.”

2. Automate as many tasks as possible.

One of the primary focuses of DataOps is data engineering automation. Data teams can automate rote tasks that typically take hours to complete, such as unit testing, hard coding ingestion pipelines, and workflow orchestration.

By using automated solutions, your team reduces the likelihood of human errors entering data pipelines and improves reliability while aiding organizations in making better and faster data-driven decisions.

3. Embrace a “ship and iterate” culture.

Speed is of the essence for most data-driven organizations. And, chances are, your data product doesn’t need to be 100 percent perfect to add value. My suggestion? Build a basic MVP, test it out, evaluate your learnings, and revise as necessary.

My firsthand experience has shown that successful data products can be built faster by testing and iterating in production, with live data. Teams can collaborate with relevant stakeholders to monitor, test, and analyze patterns to address any issues and improve outcomes. If you do this regularly, you’ll have fewer errors and decrease the likelihood of bugs entering your data pipelines.

4. Invest in self-service tooling.

A key benefit to DataOps is removing the silos that data sits in between business stakeholders and data engineers. And in order to do this, business users need to have the ability to self-serve their own data needs.

Rather than data teams fulfilling ad hoc requests from business users (which ultimately slows down decision-making), business stakeholders can access the data they need when they need it. Mammad Zadeh, the former VP of Engineering for Intuit, believes that self-service tooling plays a crucial role in enabling DataOps across an organization.

“Central data teams should make sure the right self-serve infrastructure and tooling are available to both producers and consumers of data so that they can do their jobs easily,” Mammad told us. “Equip them with the right tools, let them interact directly, and get out of the way.”

5. Prioritize data quality, then scale.

Maintaining high data quality while scaling is not an easy task. So start with your most important data assets—the information your stakeholders rely on to make important decisions.

If inaccurate data in a given asset could mean lost time, resources, and revenue, pay attention to that data and the pipelines that fuel those decisions with data quality capabilities like testing, monitoring, and alerting. Then, continue to build out your capabilities to cover more of the data lifecycle. (And going back to best practice #2, keep in mind that data monitoring at scale will usually involve automation.)

Top 6 benefits of DataOps

While DataOps exists to eliminate data silos and help data teams collaborate, teams can realize four other key benefits when implementing DataOps.

1. Better data quality

Companies can apply DataOps across their pipelines to improve data quality. This includes automating routine tasks like testing and introducing end-to-end observability with monitoring and alerting across every layer of the data stack, from ingestion to storage to transformation to BI tools.

This combination of automation and observability reduces opportunities for human error and empowers data teams to proactively respond to data downtime incidents quickly—often before stakeholders are aware anything’s gone wrong.

With these DataOps practices in place, business stakeholders gain access to better data quality, experience fewer data issues, and build up trust in data-driven decision-making across the organization.

2. Happier and more productive data teams

On average, data engineers and scientists spend at least 30% of their time firefighting data quality issues, and a key part of DataOps is creating an automated and repeatable process, which in return frees up engineering time.

Automating tedious engineering tasks such as continuous code quality checks and anomaly detection can improve engineering processes while reducing the amount of technical debt inside an organization.

DataOps leads to happier team members who can focus their valuable time on improving data products, building out new features, and optimizing data pipelines to accelerate the time to value for an organization’s data.

3. Faster access to analytic insights

DataOps automates engineering tasks such as testing and anomaly detection that typically take countless hours to perform. As a result, DataOps brings speed to data teams, fostering faster collaboration between data engineering and data science teams.

Shorter development cycles for data products reduce costs (in terms of engineering time) and allow data-driven organizations to reach their goals faster. This is possible since multiple teams can work side-by-side on the same project to deliver results simultaneously.

In my experience, the collaboration that DataOps fosters between different teams leads to faster insight, more accurate analysis, improved decision-making, and higher profitability. If DataOps is adequately implemented, teams can access data in real-time and adjust their decision-making instead of waiting for the data to be available or requesting ad-hoc support.

4. Greater agility

Markets change fast. Customer preferences shift overnight. Competitors launch new features weekly. Your data infrastructure needs to keep pace.

DataOps makes your data operation as agile as your business needs to be. New data sources integrate in days. Pipeline modifications deploy immediately. When the business pivots, the data team pivots with them.

Think about what this means practically. When a global event disrupts your industry, you’re tracking impact metrics within days, not months. When a new competitor emerges, you’re analyzing their strategy while it’s still relevant. When customers demand new features, you’re measuring adoption before the quarter ends.

This agility becomes a competitive advantage. The company that understands changes first responds first. The organization that tests and learns fastest wins. DataOps makes you that company.

5. Transparent and trustworthy data ecosystem

Trust is the foundation of data-driven culture. Without it, every number gets questioned. Every decision requires verification. Progress slows to a crawl.

DataOps builds trust systematically. Data lineage shows exactly where numbers come from. Quality metrics prove data meets standards. When issues occur, they’re communicated immediately with clear resolution timelines. Transparency replaces uncertainty.

The cultural shift is profound. Executives stop asking “is this data right?” and start asking “what does the data tell us?” Teams stop building redundant reports because they don’t trust the official ones. Usage of data platforms increases significantly because people actually believe what they see.

This trust translates directly to business value. Faster decisions. Less duplicated effort. More innovation. When everyone trusts the data, they use it. When they use it, they make better choices. The entire organization levels up.

6. Reduce operational and legal risk

As organizations strive to increase the value of data by democratizing access, it’s inevitable that ethical, technical, and legal challenges will also rise. Government regulations such as General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA) have already changed the ways companies handle data, and introduce complexity just as companies are striving to get data directly in the hands of more teams.

DataOps—specifically data observability—can help address these concerns by providing more visibility and transparency into what users are doing with data, which tables data feeds into, and who has access to data either up or downstream.

Popular DataOps tools and software

Because DataOps isn’t a subcategory of the data platform but a framework for how to manage and scale it more efficiently, the best DataOps tools to implement for your stack will likely leverage automation and other self-serve features that optimize impact against data engineering resource.

The name of the game here is scale. If a tool is functional in the short-term but limits your ability to scale commensurate to stakeholder demand in the future, that solution likely isn’t the best solution for a functional DataOps platform.

So, what do I mean by that? Let’s take a look at a few of the critical functions of a DataOps platform and how specific DataOps tools might optimize that step in your pipeline.

Data orchestration

Accurate and reliable scheduling is critical to the success of your data pipelines. Unfortunately, as your data needs begin to grow, your ability to manage those jobs effectively won’t. Hand-coded pipelines will always be limited by the engineering resource available to manage them.

Instead of requiring infinite resource to scale your data pipelines, data orchestration organizes multiple pipeline tasks (manual or automated) into a single end-to-end process, ensuring that data flows predictably through a platform at the right time and in the right order without the need to code that pipeline manually.

Data orchestration is an essential DataOps tool for the DataOps platform because it deals directly with the lifecycle of data.

Some of the most popular solutions for data orchestration include:

Data observability

As far as DataOps tools go, data observability is about as essential as they come. Not only does it ensure that the data being leveraged into data products is accurate and reliable, but it does so in a way that automates and democratizes the process for engineers and practitioners.

It’s easy to scale, it’s repeatable, and it enables teams to solve for data trust efficiently together.

A great data observability tool for your DataOps platform should also include automated lineage, to enable your DataOps engineers to understand the health of your data at every point in the DataOps lifecycle and more efficiently root-cause data incidents as they arise.

Monte Carlo provides each of these solutions out-of-the-box, as well as at-a-glance dashboards and CI/CD integrations like GitHub to improve data quality workflows across teams.

Data ingestion

As the first (and arguably the most critical) step in your data pipeline, the right data ingestion tooling is unsurprisingly important.

And much like orchestration for your pipelines, as your data sources begin to scale, leveraging an efficient automated or semi-automated solution for data ingestion will become paramount to the success of your DataOps platform.

Batch data ingestion tools include:

- Fivetran – A leading enterprise ETL solution that manages data delivery from the data source to the destination.

- Airbyte – An open source platform that easily allows you to sync data from applications.

Data streaming ingestion solutions include:

- Confluent – A full-scale data streaming platform that enables you to easily access, store, and manage data as continuous, real-time streams, and is the vendor that supports Apache Kafka , the open source event streaming platform to handle streaming analytics and data ingestion.

- Matillion – A productivity solution that also allows teams to leverage Kafka streaming data to setup new streaming ingestion jobs faster.

Data transformation

When it comes to transformations, there’s no denying that dbt has become the de facto solution across the industry and a critical DataOps tool for your DataOps platform.

Investing in something like dbt makes creating and managing complex models faster and more reliable for expanding engineering and practitioner teams because dbt relies on modular SQL and engineering best practices to make data transforms more accessible.

Unlike manual SQL writing which is generally limited to data engineers, dbt’s modular SQL makes it possible for anyone familiar with SQL to create their own data transformation jobs.

Its open source solution is robust out-of-the-box, while its managed cloud offering provides additional opportunities for scalability across domain leaders and practitioners.

Implementing DataOps at your company

The good news about Data Ops? Companies adopting a modern data stack and other best practices are likely already applying DataOps principles to their pipelines.

For example, more companies are hiring DataOps engineers to drive the adoption of data for decision-making—but these job descriptions include duties likely already being handled by data engineers at your company. DataOps engineers are typically responsible for:

- Developing and maintaining a library of deployable, tested, and documented automation design scripts, processes, and procedures.

- Collaborating with other departments to integrate source systems with data lakes and data warehouses.

- Creating and implementing automation for testing data pipelines.

- Proactively identifying and fixing data quality issues before they affect downstream stakeholders.

- Driving the awareness of data throughout the organization, whether through investing in self-service tooling or running training programs for business stakeholders.

- Familiarity with data transformation, testing, and data observability platforms to increase data reliability.

Even if other team members are currently overseeing these functions, having a specialized role dedicated to architecting how the DataOps framework comes to life will increase accountability and streamline the process of adopting these best practices.

And no matter what job titles your team members hold, just as you can’t have DevOps without application observability, you can’t have DataOps without data observability.

Data observability tools use automated monitoring, alerting, and triaging to identify and evaluate data quality and discoverability issues. This leads to healthier pipelines, more productive teams, and happier customers.

Curious to learn more? We’re always ready to talk about DataOps and observability. Monte Carlo was recently recognized as a DataOps leader by G2 and data engineers at Clearcover, Vimeo, and Fox rely on Monte Carlo to improve data reliability across data pipelines.

Reach out to Glen Willis and book a time to speak with us in the form below.

Our promise: we will show you the product.