The Future of Data Analytics: 10 Trends to Watch Out For in 2025

The future of data analytics is unfolding faster than most organizations can keep pace with. As we move through 2025, the convergence of artificial intelligence, cloud computing, and real-time processing capabilities is creating unprecedented opportunities for businesses to extract value from their data. But with these opportunities come new challenges and considerations that will fundamentally reshape how we approach analytics.

The data analytics field has moved far beyond its roots in basic reporting and historical analysis. Today's organizations operate in a radically different environment, where data streams continuously, decisions happen in milliseconds, and the line between technical and business roles continues to blur. The future of data analytics isn't just about processing more information faster. It's about fundamentally rethinking how we create, share, and monetize insights across the enterprise.

What makes this moment particularly exciting is the democratization of advanced analytics capabilities. Technologies that were once the exclusive domain of tech giants are now accessible to organizations of all sizes. From real-time streaming platforms to composable architectures, from ethical AI frameworks to cross-functional collaboration tools, the building blocks for next-generation analytics are within reach.

In this article, we'll explore ten critical trends that are shaping the future of data analytics. These aren't distant possibilities but developments already transforming how leading organizations operate today. Whether you're a data engineer, business analyst, or executive, understanding these trends is essential to thriving in a rapidly changing analytics environment and positioning your organization for success in an increasingly data-driven world.

1. The increasing velocity of big data analytics

The days of exporting data weekly or monthly and then sitting down to analyze it are long gone. Big data analytics is increasingly focused on data freshness, with the ultimate goal of real-time analysis that enables better-informed decisions and greater competitiveness.

Processing data as a stream, rather than in batches, is essential for gaining real-time insight, but it has implications for data quality: fresher data can mean a higher risk of acting on inaccurate or incomplete data (a risk that can be addressed using the principles of data observability).
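To make the batch-versus-streaming distinction concrete, here is a minimal Python sketch, not tied to Snowflake, BigQuery, or any other platform, that updates per-store sales totals in one-minute tumbling windows as each event arrives. The `Event` record and store IDs are hypothetical, and a production system would use a stream processor rather than an in-memory dictionary.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Event:
    """A single hypothetical sales event arriving on a stream."""
    timestamp: float  # seconds since epoch
    store_id: str
    amount: float


class TumblingWindowAggregator:
    """Keeps running per-store totals for fixed, non-overlapping time windows.

    Each event is folded in as it arrives, so up-to-date totals are
    available immediately instead of after a periodic batch job.
    """

    def __init__(self, window_seconds: int = 60):
        self.window_seconds = window_seconds
        # (window_start, store_id) -> running total for that window
        self.totals: dict[tuple[int, str], float] = defaultdict(float)

    def window_start(self, timestamp: float) -> int:
        # Align the timestamp to the start of its window
        return int(timestamp // self.window_seconds) * self.window_seconds

    def add(self, event: Event) -> float:
        """Fold one event into its window and return that window's new total."""
        key = (self.window_start(event.timestamp), event.store_id)
        self.totals[key] += event.amount
        return self.totals[key]
```

A batch job would compute the same totals, but only once the whole export had landed; here every call to `add` returns a current figure.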

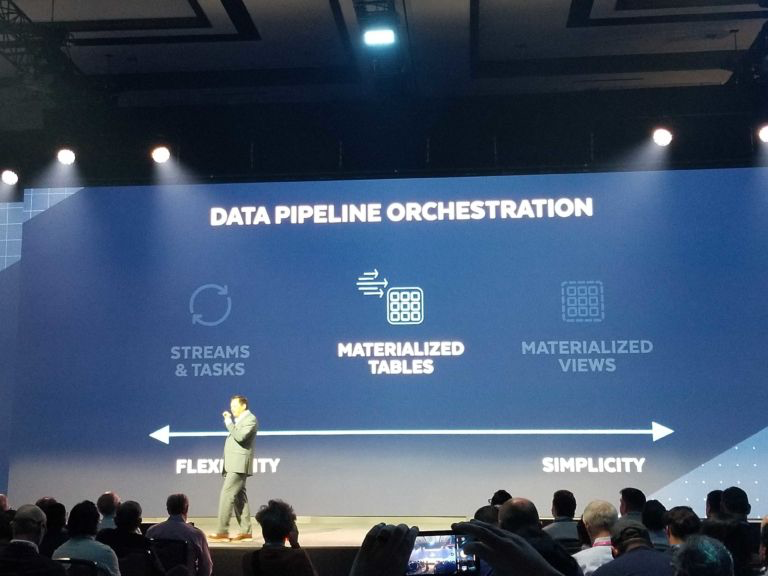

Snowflake, for example, announced Snowpipe Streaming at its Summit conference. The company refactored its Kafka connector so that data is queryable as soon as it lands in Snowflake, which it says results in 10x lower latency.

Google has announced that Pub/Sub can stream directly into BigQuery, and has launched Dataflow Prime, an upgraded version of its managed streaming analytics service.

On the data lake side, Databricks has launched Unity Catalog to help bring more metadata, structure, and governance to data assets.

Real-time data/insights

Being able to access real-time data for analysis might sound like overkill to some, but that’s just no longer the case. Imagine trading Bitcoin based on what it was worth last week or writing your tweets based on what was trending a month ago.

Real-time insight has already shaken up industries like finance and social media, but its implications beyond them are huge: Walmart, for example, has built what may be the world’s largest hybrid cloud to, among other things, manage their supply chains and analyze sales in real time.

Real-time, automated decision making

Machine learning (ML) and artificial intelligence (AI) are already being used successfully in healthcare, for detection and diagnosis, and in manufacturing, where intelligent systems track wear and tear on parts. When a part is close to failure, the system might automatically reroute the assembly line until the part can be fixed.

That's one practical example, but there are all sorts of other applications: email marketing software that can identify the winner of an A/B test and apply it to other emails, for instance, or analysis of customer data to determine loan eligibility. Of course, businesses that don't yet feel comfortable fully automating decisions can always retain a final manual approval step.
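The A/B-test example can be sketched in a few lines of Python. This assumes click-through rate is the success metric and uses a standard two-proportion z-test at a 5% significance level; real email platforms may use different statistics (Bayesian methods, for example). The `Variant` record and the `require_approval` flag, which models the optional manual sign-off step, are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    sends: int   # emails delivered
    clicks: int  # recipients who clicked


def two_proportion_p_value(a: Variant, b: Variant) -> float:
    """Two-sided p-value for the difference in click rates (pooled z-test)."""
    p_a = a.clicks / a.sends
    p_b = b.clicks / b.sends
    pooled = (a.clicks + b.clicks) / (a.sends + b.sends)
    se = math.sqrt(pooled * (1 - pooled) * (1 / a.sends + 1 / b.sends))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_a - p_b) / se
    # Standard normal CDF computed from the error function
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return 2 * (1 - phi)


def pick_winner(a: Variant, b: Variant, alpha: float = 0.05,
                require_approval: bool = True) -> dict:
    """Declare a winner only if the difference is statistically significant."""
    p = two_proportion_p_value(a, b)
    if p >= alpha:
        return {"winner": None, "p_value": p, "status": "inconclusive"}
    winner = a if a.clicks / a.sends > b.clicks / b.sends else b
    # Either queue the decision for a human or apply it automatically
    status = "pending_approval" if require_approval else "applied"
    return {"winner": winner.name, "p_value": p, "status": status}
```

Setting `require_approval=False` is the fully automated version; leaving it on keeps a person in the loop until the business trusts the rule.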

2. The heightened veracity of big data analytics

The more data we collect, the more difficult it is to ensure its accuracy and quality. To read more about this, check out our recent post on the future of data management, but for now, let's get into trends surrounding the veracity of big data analytics.

Data quality

Making data-driven decisions is always a sensible business move…unless those decisions are based on bad data. And data that’s incomplete, invalid, inaccurate, or fails to take context into account is bad data. Fortunately, many data analytics tools are now capable of identifying and drawing attention to data that seems out of place.

It's always best, of course, to treat the cause of a problem rather than the symptom. Instead of relying only on tools to flag bad data in the dashboard, businesses need to scrutinize their pipelines from end to end. Figuring out the right source(s) to draw data from for a given use case, how that data is analyzed, who is using it, and so on will result in healthier data overall and should reduce data downtime.
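Checks like these can run inside the pipeline, at the point where data is loaded, rather than only surfacing in a dashboard. The minimal Python sketch below assumes a hypothetical orders table with `order_id`, `amount`, and `order_date` fields and flags records that are incomplete (missing fields), invalid (negative amounts), or implausible (dates in the future). Real rules would come from your own business context.

```python
from datetime import date

# Hypothetical required fields for an orders table
REQUIRED = ("order_id", "amount", "order_date")


def validate(record: dict, today: date) -> list[str]:
    """Return the reasons a record is bad; an empty list means it passed."""
    problems = []
    missing = [f for f in REQUIRED if record.get(f) is None]
    if missing:  # incomplete
        problems.append(f"missing: {', '.join(missing)}")
    amount = record.get("amount")
    if amount is not None and amount < 0:  # invalid
        problems.append("negative amount")
    order_date = record.get("order_date")
    if order_date is not None and order_date > today:  # implausible
        problems.append("order_date in the future")
    return problems


def split_good_bad(records: list[dict], today: date):
    """Separate clean records from flagged ones, keeping the reasons."""
    good, bad = [], []
    for record in records:
        reasons = validate(record, today)
        if reasons:
            bad.append((record, reasons))
        else:
            good.append(record)
    return good, bad
```

Quarantining the flagged records, instead of silently dropping them, makes it easier to trace each problem back to its source.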

Data observability

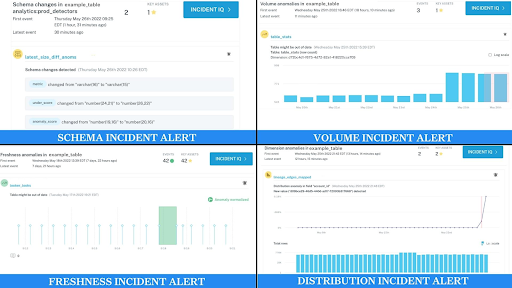

There's more to observability than monitoring and alerting you to broken pipelines. Understanding the five pillars of data observability (freshness, schema, volume, distribution, and lineage) is the first step for businesses looking to get a handle on the health of their data and improve its overall quality.
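Three of those pillars translate naturally into automated checks. The Python sketch below is a simplified illustration, not how Monte Carlo or any other platform implements them. It assumes you can query a table's last load time, its recent daily row counts, and its column types, and it uses a fixed freshness window and a simple z-score rule for volume anomalies. Distribution and lineage need richer metadata and are left out.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


def check_freshness(last_loaded_at: datetime, max_age: timedelta,
                    now: datetime) -> bool:
    """Freshness: was the table updated recently enough?"""
    return now - last_loaded_at <= max_age


def check_volume(todays_rows: int, history: list[int],
                 z_threshold: float = 3.0) -> bool:
    """Volume: is today's row count within z_threshold standard deviations
    of recent history? (history needs at least two observations)"""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return todays_rows == mu
    return abs(todays_rows - mu) / sigma <= z_threshold


def check_schema(actual: dict[str, str], expected: dict[str, str]) -> list[str]:
    """Schema: list columns that were dropped, added, or changed type."""
    issues = [f"dropped: {c}" for c in expected if c not in actual]
    issues += [f"added: {c}" for c in actual if c not in expected]
    issues += [
        f"type changed: {c} {expected[c]} -> {actual[c]}"
        for c in expected
        if c in actual and actual[c] != expected[c]
    ]
    return issues
```

Hard-coded thresholds are the weak point of this approach; learning them from each table's history is what observability platforms aim to automate.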

Beyond that, a data observability platform like Monte Carlo can automate monitoring, alerting, lineage, and triaging to highlight data quality and discoverability issues (and potential issues). The ultimate goal here is to eliminate bad data altogether and prevent it from recurring.

Data governance

With the volumes of data we’re talking about here, taking proper protective measures becomes even more important. Compliance with measures like the General Data Protection Regulation (GDPR) and California Consumer Privacy Act (CCPA) is vital to avoid fines, but there’s also the issue of how damaging data breaches can be to a company’s brand and reputation.

We've previously written about data discovery, which provides real-time insights about data across domains while abiding by a central set of governance standards, but it's worth bringing up again here.

Creating and implementing a data certification program is one way to ensure that every department within a business uses only data that conforms to appropriate, agreed-upon standards. Beyond that, data catalogs can be used to outline how stakeholders can (and can't) use data.
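One way a certification program and a catalog can work together is by making usage rules machine-checkable. In the minimal Python sketch below, `CatalogEntry` is a hypothetical record, not any real catalog product's API. A dataset may be used only if it is certified and the requested purpose is on its approved list.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Hypothetical catalog record describing one dataset."""
    name: str
    owner: str
    certified: bool
    allowed_uses: set[str] = field(default_factory=set)


def may_use(entry: CatalogEntry, purpose: str) -> tuple[bool, str]:
    """Decide whether a dataset may be used for a purpose, with a reason."""
    if not entry.certified:
        return False, f"{entry.name} is not certified"
    if purpose not in entry.allowed_uses:
        return False, f"{purpose!r} is not an approved use of {entry.name}"
    return True, "ok"
```

Returning a reason alongside the decision tells the person who was refused what would need to change.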

3. Storage and analytics platforms are handling larger volumes of data

With cloud technology, storage capacity and processing power can scale almost without limit. Businesses no longer need to worry about buying physical storage or extra machines, because they can use the cloud to scale to whatever level they need at that moment.

Beyond that, cloud data processing means that multiple stakeholders can access the same data at the same time without experiencing slowdowns or roadblocks. It also means that, as long as the right security measures are in place, up-to-the-minute data can be accessed at any time and from anywhere.

Data warehousing is the current status quo, and the most notable providers (Snowflake, Redshift, and BigQuery) all operate in the cloud. Elsewhere, Databricks and its "data lakehouse" combine elements of data warehouses and data lakes.

But the primary aim remains the same: data, analysis, and potentially AI in one (or just a few) places. Of course, more data also means a pressing need for more and better ways to handle, organize, and display these large datasets so they are easily digestible.

Keenly aware of that need, modern business intelligence tools (Tableau, Domo, Zoho Analytics, etc.) increasingly prioritize dashboarding, making it easier to manage and track large volumes of information and enable data-driven decisions.

4. Processing data variety is easier

With larger volumes of data comes, typically, more disparate sources of data. Managing all these different formats, along with obtaining any sort of consistency, is virtually impossible to do manually…unless you have a very large team that’s fond of thankless tasks.

Tools like Fivetran come equipped with 160+ data source connectors, covering everything from marketing analytics to finance and ops analytics. Data can be pulled from many of these sources at once, and prebuilt (or custom) transformations applied, to create reliable data pipelines.

Similarly, Snowflake has partnered with services like Qubole (a cloud big data-as-a-service company) to build ML and AI capabilities into its data platform: given the right training data, importing data of type X can automatically trigger outcome Y within Snowflake.

Fortunately, the emphasis in big data analytics is currently on collating data from different sources and finding ways to use it together, rather than trying to force consistency before the data is loaded where it needs to be.
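This is essentially the extract-load-transform (ELT) pattern: land the data in its native shape first, then reconcile it where it lives. The minimal Python sketch below uses two hypothetical sources, a CRM export and a billing system, that store the same customer with different field names, ID types, and timestamp formats, and maps both into one shared schema after loading. In practice this step would usually be SQL or dbt models inside the warehouse. Plain Python just keeps the example self-contained.

```python
from datetime import datetime, timezone

# Hypothetical raw records as landed from two sources, each in its native shape
crm_rows = [{"CustomerID": "42", "Email": "A@Example.com", "Created": "2024-03-01"}]
billing_rows = [{"cust_id": 42, "email_address": "a@example.com", "created_ts": 1709251200}]


def from_crm(row: dict) -> dict:
    return {
        "customer_id": int(row["CustomerID"]),            # string ID -> int
        "email": row["Email"].strip().lower(),            # normalize casing
        "created_at": datetime.fromisoformat(row["Created"]).replace(tzinfo=timezone.utc),
        "source": "crm",
    }


def from_billing(row: dict) -> dict:
    return {
        "customer_id": row["cust_id"],
        "email": row["email_address"].strip().lower(),
        "created_at": datetime.fromtimestamp(row["created_ts"], tz=timezone.utc),  # epoch seconds
        "source": "billing",
    }


def unify(crm: list[dict], billing: list[dict]) -> list[dict]:
    """Map each source into one shared shape after it has been loaded."""
    return [from_crm(r) for r in crm] + [from_billing(r) for r in billing]
```

Keeping a `source` column preserves lineage, so a mismatch found later can be traced back to the system that produced it.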

5. Democratization and decentralization of data

For many years, business analysts and executives have had to turn to in-house data scientists when they needed to extract and analyze data. Things are very different today, with services and tools that enable non-technical audiences to engage with data.

We're seeing more emphasis on analytics engineering, with tools like dbt focused on "modeling data in a way that empowers end users to answer their own questions." In other words, the goal is to enable stakeholders rather than to analyze data or model projections on their behalf.

Plus, there’s lots of talk about a more visual approach – modern business intelligence tools like Tableau, Mode, and Looker all talk about visual exploration, dashboards, and best practices on their websites. The movement to democratize data is well and truly underway.

No-code solutions

As their name suggests, no-code tools rework an existing process so that it no longer requires any coding knowledge. On the consumer side, we've seen products like Squarespace and Webflow do exactly that, and tools like Obviously AI are shaking up the big data analytics space in a similar way.

The biggest advantage of no-code (and low code) tools is that they enable stakeholders to get to grips with data without having to pester the data team. This not only frees up data scientists to work on more intensive activities, but also encourages data-driven decisions throughout the company because engaging with data is something that everyone is now capable of.

Microservices / data marketplaces

The use of microservices architecture breaks down monolithic applications into smaller, independently deployable services. In addition to simplifying deployment of these services, it also makes it easier to extract relevant information from them. This data can be remixed and reassembled to generate or map out different scenarios as needed.

That can also be useful for identifying a gap (or gaps) in the data you’re trying to work with. Once you’ve done that, you can use a data marketplace to fill in those gaps, or augment the information you’ve already collected, so you can get back to making data-driven decisions.

Data mesh

The aim of using a data mesh is to break down a monolithic data lake, decentralizing core components into distributed data products that can be owned independently by cross-functional teams.

Empowering these teams to maintain and analyze their own data gives them control over the information relevant to their area of the business. Data is no longer the exclusive property of one specific team but something that everyone contributes value to.

6. Leveraging GenAI and RAG

We're entering a transformative era in big data analytics as three emerging trends gain traction: generative AI (GenAI), retrieval-augmented generation (RAG), and AI agents.

GenAI is particularly exciting. It pushes the boundaries of traditional data analysis, allowing us to generate synthetic datasets and automate content creation. This innovation opens up new avenues for predictive analytics and data visualization, which were previously limited by the scale and scope of manually gathered datasets. As data engineers, our role is evolving from merely managing data flows to actively participating in the generation of data that can provide deeper insights and foster innovation in various business domains.

RAG and AI agents, on the other hand, present unique challenges and opportunities. They enhance AI models by augmenting them with real-time data retrieval (RAG) or with the ability to automate tasks using tools (agents). Integrating them into our data systems requires agent observability as well as a sophisticated understanding of how to orchestrate data flows efficiently and ensure the seamless retrieval of relevant information. This calls for an advanced skill set in data pipeline architecture, focused on agility and accuracy, to support the dynamic nature of agentic systems.
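Here is the retrieval half of RAG in miniature. This Python sketch scores documents against a question with bag-of-words cosine similarity, a deliberate simplification of the learned embeddings and vector stores real RAG systems typically use, and prepends the best matches to the prompt. The documents are made up, and the call to an actual language model is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Augment the question with retrieved context before it reaches a model."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The pipeline concerns mentioned above show up even here: if the document store is stale or incomplete, the model is handed the wrong context no matter how good it is.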

7. The rise of data products and analytics monetization

The shift from viewing analytics as a cost center to a revenue generator is fundamentally changing how organizations approach their data strategies. Forward-thinking companies are now packaging their analytics outputs as distinct “data products” complete with dedicated data product managers, service-level agreements (SLAs), and clear value propositions.

This productization of analytics isn’t just an internal exercise. Many enterprises are discovering that the insights they’ve developed for internal use can be monetized externally. Financial institutions are selling market intelligence APIs, retailers are offering consumer behavior insights to CPG companies, and logistics firms are monetizing their supply chain analytics.

The product mindset is reshaping analytics teams themselves. Instead of operating as service desks responding to ad-hoc requests, analytics teams are now structured like product teams with clear ownership, roadmaps, and success metrics. Data engineers and analysts are collaborating more closely with product managers and customer success teams to ensure their data products deliver measurable value.

This trend signals a future where internal analytics capabilities become external revenue streams, and data truly becomes a business asset rather than just a byproduct of operations.

8. Building composable and modular analytics architectures

The era of monolithic, all-in-one analytics platforms is giving way to a more flexible, composable approach. Organizations are increasingly building their analytics infrastructure by selecting best-of-breed tools for each component and connecting them through open standards and APIs.

This modular approach offers unprecedented agility. Organizations can swap out data orchestration tools, upgrade visualization capabilities, or replace transformation layers without rebuilding entire pipelines. This flexibility proves particularly valuable as new technologies emerge and business needs evolve.
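In code, composability amounts to depending on interfaces rather than on specific tools. The Python sketch below uses `typing.Protocol` to define hypothetical `Transformer` and `Sink` interfaces. Any class with matching methods can be plugged in, so replacing a transformation step or a destination does not require rewriting the pipeline. The step and sink classes are illustrative stand-ins for real transformation and storage tools.

```python
from collections.abc import Iterable
from typing import Protocol


class Transformer(Protocol):
    def transform(self, rows: list[dict]) -> list[dict]: ...


class Sink(Protocol):
    def write(self, rows: list[dict]) -> None: ...


class DropNulls:
    """One interchangeable transformation step: remove rows with any null value."""
    def transform(self, rows: list[dict]) -> list[dict]:
        return [r for r in rows if all(v is not None for v in r.values())]


class UppercaseKeys:
    """An alternative step that can be added or swapped without touching the pipeline."""
    def transform(self, rows: list[dict]) -> list[dict]:
        return [{k.upper(): v for k, v in r.items()} for r in rows]


class InMemorySink:
    """Stand-in for a warehouse or lake destination."""
    def __init__(self) -> None:
        self.rows: list[dict] = []

    def write(self, rows: list[dict]) -> None:
        self.rows.extend(rows)


def run_pipeline(rows: Iterable[dict], steps: list[Transformer], sink: Sink) -> None:
    """The pipeline depends only on the interfaces, not on concrete tools."""
    data = list(rows)
    for step in steps:
        data = step.transform(data)
    sink.write(data)
```

Because `Protocol` uses structural typing, third-party components never need to inherit from these classes; they only need the right method names and signatures.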

The open-source community drives much of this composability trend through tools that establish common standards for interoperability, such as open table formats like Apache Iceberg and shared in-memory formats like Apache Arrow. Meanwhile, cloud providers are embracing the trend with services that help organizations manage heterogeneous data environments.

The business impact is significant: reduced vendor lock-in, faster adoption of new technologies, and the ability to optimize costs by choosing the right tool for each job. This approach allows organizations to build analytics stacks that combine dozens of specialized tools into cohesive, high-performing solutions.

9. The growing importance of responsible AI and ethics

As AI and machine learning become integral to big data analytics, the imperative for responsible, ethical data practices has moved from nice-to-have to business-critical. Regulatory pressures combined with high-profile cases of algorithmic bias are forcing organizations to examine their analytics practices.

Leading companies implement comprehensive AI governance frameworks that include regular bias audits, model explainability requirements, and diverse review boards. New tools make it easier to detect and mitigate bias in data models, while data observability platforms expand their capabilities to monitor not just data quality but also fairness metrics and model drift.
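Two metrics of the kind mentioned above can be sketched in plain Python: demographic parity difference (the gap in positive-prediction rates between groups) as one simple fairness measure, and the population stability index (PSI) as one common drift measure. These are illustrative choices, not the only or best metrics, and in a real audit the predictions, group labels, and bin proportions would come from your own models.

```python
import math


def demographic_parity_difference(predictions: list[int], groups: list[str]) -> float:
    """Largest gap in positive-prediction rate (predictions are 0/1) between groups."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(predictions, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as bin proportions.

    Thresholds vary by team; values above roughly 0.2 to 0.25 are often
    read as meaningful drift.
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi
```

Run on a schedule against fresh predictions, checks like these become the fairness and drift monitors the paragraph above describes.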

The focus on responsible AI encompasses more than technical solutions. Organizations invest in training programs to help data teams understand the ethical implications of their work, establish clear guidelines for data collection and use, and implement transparency measures that allow stakeholders to understand how AI-driven decisions are made.

This trend toward accountability reshapes how analytics teams design, deploy, and monitor their solutions, ensuring that advanced analytics serve all stakeholders fairly and transparently.

10. Cross-functional collaboration is transforming analytics teams

The traditional siloed approach to analytics is rapidly becoming obsolete. The future belongs to cross-functional teams where data engineers, analysts, domain experts, and business stakeholders collaborate throughout the analytics lifecycle.

This collaborative approach is supported by new tools and platforms designed for data sharing and joint analysis. Modern platforms support everything from exploratory analysis to production-ready data pipelines, all within environments that promote transparency and knowledge sharing.

The cultural shift is equally important. Organizations are breaking down barriers between technical and business roles, encouraging data literacy across all functions while helping data professionals develop stronger business acumen. Some companies embed data engineers and analysts directly within business units, creating pods that can move quickly from insight to action.

The impact on analytics outcomes can be significant. When marketing analysts work directly with campaign managers, data engineers collaborate with product teams, and finance experts partner with data scientists, the result is faster, more relevant insights that directly address business needs. This collaborative model creates cultures where data partnership becomes the norm rather than the exception.

Be ready for the future of big data analytics

The ten trends we’ve explored represent more than incremental improvements to existing analytics practices. They signal a fundamental shift in how organizations create value from data. From real-time processing and ethical AI to composable architectures and cross-functional collaboration, these developments are redefining what’s possible in the analytics space.

What’s particularly encouraging is that these capabilities are no longer reserved for tech giants with unlimited budgets. Cloud platforms, open-source tools, and no-code solutions have democratized access to advanced analytics. Small and mid-size companies can now build sophisticated data operations that rival those of much larger competitors.

The organizations that will thrive in this new era are those that recognize analytics as a strategic differentiator rather than a support function. They’re the ones investing in data literacy across all levels, building flexible architectures that can adapt to change, and treating their analytics outputs as valuable products worthy of monetization.

As we look ahead, the pace of innovation shows no signs of slowing. New technologies will emerge, regulations will shift, and business needs will continue to change. But the fundamentals remain constant. Organizations that prioritize data quality, embrace collaboration, and maintain ethical standards while pushing the boundaries of what’s technically possible will find themselves best positioned for whatever comes next.

The future of data analytics belongs to those ready to act on these trends today.
