Data Ingestion: 7 Challenges and 4 Best Practices

Organizations today handle data from more sources than ever before. Customer information, transaction records, application logs, sensor readings, and third-party feeds all contain valuable insights. Yet this data often remains scattered across different platforms, databases, and file formats. The real challenge lies in bringing it all together for analysis.

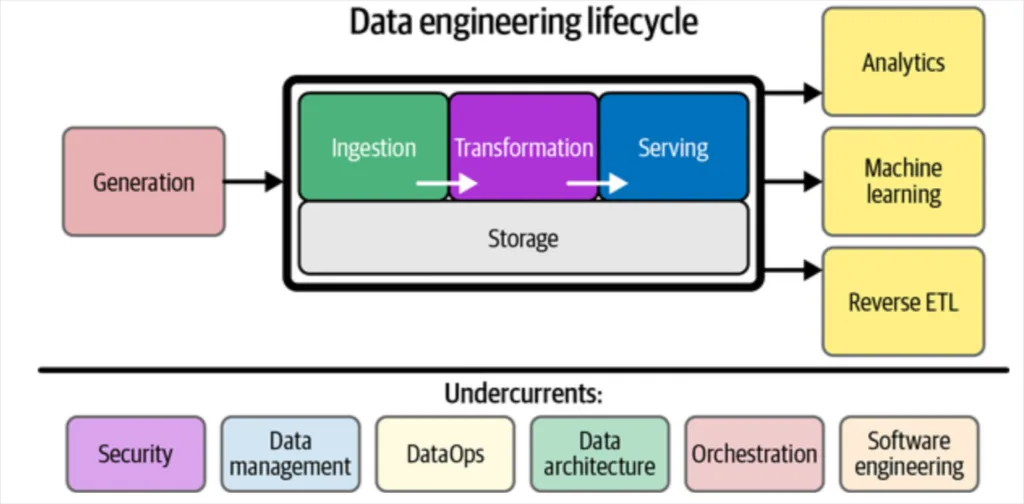

Data ingestion is the fundamental first step in any analytics or business intelligence pipeline. Without efficient ingestion, valuable data sits trapped in silos across your organization, invisible to the people who need it most. Poor ingestion means delayed reports, missed opportunities, and decisions based on incomplete information. A well-designed ingestion strategy ensures your data is available, accurate, and ready for analysis when business questions arise.

In this article, we’ll explain the core concepts and types of data ingestion, walk through a typical ingestion pipeline, and discuss the challenges teams face along with proven solutions. You’ll learn the key differences between batch and streaming approaches, understand the stages of the ingestion process, and pick up best practices for data ingestion. By the end, you’ll have actionable insights to optimize your data ingestion strategy and unlock the full value of your organization’s data.

Table of Contents

- What is Data Ingestion?

- Types of Data Ingestion

- Key stages in the data ingestion process flow

- Benefits of a strong data ingestion process

- 7 Challenges to Data Ingestion

- Best Practices for Data Ingestion

- How data observability can complement your data ingestion

What is Data Ingestion?

Data ingestion is the process of acquiring and importing data for use, either immediately or in the future. Data can be ingested via either batch or stream processing.

Data ingestion works by transferring data from a variety of sources into a single common destination, where data orchestrators can then analyze it.

For many companies, the sources of data ingestion are voluminous. They include old-school spreadsheets, web scraping tools, IoT devices, proprietary apps, SaaS applications—the list goes on. Data from these sources are often ingested into a cloud-based data warehouse or data lake, where they can then be mined for information and insights.

Without ingesting data into a central data repository, organizations’ data would be trapped within silos. This would require data consumers to engage in the tedious process of having to log into each source system or SaaS platform to see just a tiny fraction of the bigger picture. Decision making would be slower and less accurate.

Data ingestion sounds straightforward, but in fact it’s quite complicated—and increasing volumes and varieties of data sources (aka big data) make collecting, compiling, and transforming data so it’s cohesive and usable a persistent challenge.

For example, APIs will often export data in JSON format and the ingestion pipeline will need to not only transport the data but apply light transformation to ensure it is in a table format that can be loaded into the data warehouse. Other common transformations done within the ingestion phase are data formatting and deduplication.
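The light transformation described above can be sketched in a few lines of Python. This is only an illustration under assumptions: the payload, the field names, and the choice of `id` as the deduplication key are invented for the example, and a real pipeline would take them from the source API.

```python
import json


def flatten(record, parent_key="", sep="_"):
    """Flatten nested dicts into one level, joining keys with `sep`."""
    flat = {}
    for key, value in record.items():
        column = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, column, sep))
        else:
            flat[column] = value
    return flat


def to_rows(payload, key_field):
    """Parse a JSON array, flatten each object, and skip repeated keys."""
    seen = set()
    rows = []
    for record in json.loads(payload):
        row = flatten(record)
        if row[key_field] in seen:
            continue  # keep only the first occurrence of each key
        seen.add(row[key_field])
        rows.append(row)
    return rows


# Hypothetical API response with one nested object and one duplicate record.
payload = """[
  {"id": 1, "name": "Ada", "address": {"city": "London", "zip": "N1"}},
  {"id": 2, "name": "Grace", "address": {"city": "New York", "zip": "10001"}},
  {"id": 1, "name": "Ada", "address": {"city": "London", "zip": "N1"}}
]"""

rows = to_rows(payload, key_field="id")
for row in rows:
    print(row)
```

Each resulting row has flat column names such as `address_city`, which is the shape a warehouse table load typically expects.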

Data ingestion tools that automatically deploy connectors to collect and move data from source to target system without requiring Python coding skills can help relieve some of this pressure.

Types of Data Ingestion

There are two overarching types of data ingestion: streaming and batch. Each data ingestion framework fulfills a different need regarding the timeline required to ingest and activate incoming data.

Streaming

Streaming data ingestion is exactly what it sounds like: data ingestion that happens in real-time. When the source is a database, this type of data ingestion often leverages change data capture (CDC) to monitor transaction or redo logs on a constant basis, then move any changed data (e.g., a new transaction, an updated stock price, a power outage alert) to the destination data cloud without disrupting the database workload.

Streaming data ingestion is helpful for companies that deal with highly time-sensitive data. For example, financial services companies analyzing constantly-changing market information, or power grid companies that need to monitor and react to outages in real-time.

Streaming data ingestion tools:

- Apache Kafka, an open-source event streaming platform

- Amazon Kinesis, a streaming solution from AWS

- Google Pub/Sub, a GCP service that ingests streaming data into BigQuery, data lakes, or operational databases

- Apache Spark, a unified analytics engine for large-scale data processing

- Upsolver, an ingestion tool built on Amazon Web Services to ingest data from streams, files and database sources in real time directly to data warehouses or data lakes

Batch

Batch data ingestion is the most commonly used type of data ingestion, and it’s used when companies don’t require immediate, real-time access to data insights. In this type of data ingestion, data moves in batches at regular intervals from source to destination.

Batch data ingestion is helpful for data users who create regular reports, like sales teams that share daily pipeline information with their CRO. For a use case like this, real-time data isn’t necessary, but reliable, regularly recurring data access is.

Some data teams will leverage micro-batch strategies for time sensitive use cases. These involve data pipelines that will ingest data every few hours or even minutes.

Also worth noting is lambda architecture-based data ingestion which is a hybrid model that combines features of both streaming and batch data ingestion. This method leverages three data layers: the batch layer, which offers an accurate, whole-picture view of a company’s data; the speed layer, which serves real-time insights more quickly but with slightly lower accuracy; and the serving layer, which combines outputs from both layers, so data orchestrators can view sensitive information more quickly while still maintaining access to a more accurate overarching batch layer.
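To make the three layers more concrete, here is a toy sketch of a lambda-style setup. It assumes events are simple `(timestamp, key, amount)` tuples and that the batch layer last ran at a known cutoff; the function names and sample data are hypothetical, and real systems would back each layer with separate infrastructure.

```python
def batch_view(events, cutoff):
    """Batch layer: recompute totals from every event up to the last batch run."""
    totals = {}
    for timestamp, key, amount in events:
        if timestamp <= cutoff:
            totals[key] = totals.get(key, 0) + amount
    return totals


def speed_view(events, cutoff):
    """Speed layer: totals for events that arrived after the last batch run."""
    totals = {}
    for timestamp, key, amount in events:
        if timestamp > cutoff:
            totals[key] = totals.get(key, 0) + amount
    return totals


def serving_view(batch, speed):
    """Serving layer: combine both views into one answer for queries."""
    merged = dict(batch)
    for key, amount in speed.items():
        merged[key] = merged.get(key, 0) + amount
    return merged


# Hypothetical sales events; the batch job last ran at timestamp 2.
events = [(1, "store_a", 100), (2, "store_b", 50), (3, "store_a", 25)]
cutoff = 2

combined = serving_view(batch_view(events, cutoff), speed_view(events, cutoff))
print(combined)
```

When the next batch run completes, the cutoff moves forward and the speed layer only has to cover the newer events.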

Batch data ingestion tools:

- Fivetran, which manages data delivery from source to destination

- Singer, an open-source tool for moving data from a source to a destination

- Stitch, a cloud-based platform built on the open-source Singer framework that moves data from source to destination rapidly

- Airbyte, an open-source platform that easily allows data sync across applications

For a full list of modern data stack solutions across ingestion, orchestration, transformation, visualization, and data observability check out our article, “What Is A Data Platform And How To Build An Awesome One.”

Key stages in the data ingestion process flow

A well-designed data ingestion pipeline consists of multiple stages that ensure data is collected, prepared, and loaded correctly. Here are the key stages of the ingestion process, from raw source to ready-for-analysis data.

1. Data discovery

Before you can ingest anything, you need to know what data exists and where it lives. Large organizations often require a formal discovery phase to understand data across departments, its formats, and locations. This means cataloging databases, APIs, external datasets, and any other data sources.

This stage involves working with business stakeholders to determine which sources matter for your analytics goals. Data discovery tools or catalogs can help find and document these assets, but the human element remains crucial. You’re essentially mapping your data landscape.

For a retail company, sources might include sales transaction databases, e-commerce website logs, marketing campaign data from SaaS platforms, and third-party market data feeds. Each needs identification and documentation before ingestion begins. Proper discovery ensures no critical source gets overlooked and helps you plan for the variety and volume of data you’ll handle.

2. Data extraction

Once you’ve identified your sources, extraction begins. This means connecting to databases through queries, calling APIs, pulling files from servers, or reading message queues. Each source type requires its own approach.

The variety creates complexity. Relational databases need SQL queries. APIs require REST calls returning JSON. Flat files come as CSVs or XML. Streaming sources send continuous feeds. Each format and protocol demands different handling, and maintaining data integrity during transfer becomes critical.

Many ingestion tools provide pre-built connectors for common sources like Salesforce, Google Analytics, or MySQL. When connectors aren’t available or lack certain fields, engineers write custom code. This becomes a significant pain point in many organizations. For example, ingesting CRM data might use a REST API to pull customer records, while log files require setting up processes to read and transfer files from servers.
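As a rough illustration of the custom extraction code mentioned above, the sketch below pages through a REST-style endpoint until it runs out of records. The `fetch_page` callable, its `page` and `page_size` parameters, and the in-memory `CUSTOMERS` list are hypothetical stand-ins for a real HTTP client and CRM API, which will have its own pagination scheme.

```python
def extract_all(fetch_page, page_size=2):
    """Request pages in order until a short (or empty) page signals the end."""
    records = []
    page = 1
    while True:
        batch = fetch_page(page=page, page_size=page_size)
        records.extend(batch)
        if len(batch) < page_size:
            break  # last page reached
        page += 1
    return records


# Hypothetical stand-in for a CRM API: serves slices of an in-memory list.
CUSTOMERS = [{"id": i} for i in range(1, 6)]


def fake_fetch_page(page, page_size):
    start = (page - 1) * page_size
    return CUSTOMERS[start:start + page_size]


customers = extract_all(fake_fetch_page)
print(len(customers), "records extracted")
```

Passing the fetch function in as a parameter keeps the paging logic testable without network access; in production it would wrap the actual API call.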

3. Data validation

Raw data needs validation before it enters your environment. This means checking for errors, completeness, and consistency as data arrives. Without this step, bad data pollutes your warehouse or lake, leading to flawed analytics downstream.

Key validation tasks include verifying file integrity and record counts, ensuring data conforms to expected schemas, and catching schema drift early. Even small changes like an added column or modified field type can break pipelines. You’ll also need to identify missing values, outliers, and duplicates.

If ingesting customer data, validation might check that all records have Customer IDs, email fields contain valid formats, and no duplicate IDs exist. Rows failing checks get quarantined for review rather than loaded blindly. Some organizations implement data quality firewalls that stop pipelines when data looks clearly wrong, like when a daily sales file arrives empty or unusually large.
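The customer data checks described above might look something like the following sketch. The field names `customer_id` and `email`, the deliberately simple email pattern, and the sample records are assumptions made for illustration.

```python
import re

# Intentionally simple pattern: something@something.something
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(records):
    """Split records into loadable rows and quarantined rows with a reason."""
    valid, quarantined = [], []
    seen_ids = set()
    for record in records:
        customer_id = record.get("customer_id")
        email = record.get("email") or ""
        if not customer_id:
            quarantined.append((record, "missing customer_id"))
        elif customer_id in seen_ids:
            quarantined.append((record, "duplicate customer_id"))
        elif not EMAIL_PATTERN.match(email):
            quarantined.append((record, "invalid email"))
        else:
            seen_ids.add(customer_id)
            valid.append(record)
    return valid, quarantined


# Hypothetical incoming batch.
records = [
    {"customer_id": "C1", "email": "a@example.com"},
    {"customer_id": "C2", "email": "not-an-email"},
    {"customer_id": None, "email": "b@example.com"},
    {"customer_id": "C1", "email": "c@example.com"},
]

valid, quarantined = validate(records)
print(len(valid), "valid;", [reason for _, reason in quarantined])
```

Quarantined rows keep their failure reason so that someone can review them later instead of the pipeline silently dropping or loading them.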

4. Data transformation

The transformation stage varies depending on your approach. Some pipelines perform extensive transformations before loading, while others defer this work. The choice between ETL and ELT patterns determines how much happens here.

If transforming during ingestion, you might convert data types, standardize formats, normalize values for consistency, aggregate data to higher levels, or enrich records with additional context. You might also filter out unnecessary data to reduce volume. During ingestion, an IoT pipeline might convert raw sensor readings to standardized units and filter obvious errors like negative temperature values.
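A minimal sketch of the IoT example follows. It assumes, purely for illustration, that the sensors report temperature in kelvin (where a negative value is physically impossible) and that the destination expects degrees Celsius; the field names are hypothetical.

```python
def transform_readings(readings):
    """Drop impossible readings and convert kelvin to degrees Celsius."""
    cleaned = []
    for reading in readings:
        kelvin = reading["temp_k"]
        if kelvin < 0:
            continue  # negative kelvin cannot be a real measurement
        cleaned.append(
            {
                "sensor_id": reading["sensor_id"],
                "temp_c": round(kelvin - 273.15, 2),
            }
        )
    return cleaned


# Hypothetical raw sensor readings, one of them clearly faulty.
raw = [
    {"sensor_id": "s1", "temp_k": 293.15},
    {"sensor_id": "s2", "temp_k": -5.0},
]

cleaned = transform_readings(raw)
print(cleaned)
```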

Modern pipelines often minimize transformation at this stage, especially when following ELT patterns. They might just convert JSON to tabular formats or perform basic cleaning, leaving complex transformations for the destination platform. This speeds up ingestion but requires processing power later.

5. Loading into destination

The final stage loads data into target storage. This could be a data warehouse, lake, lakehouse, or specialized database. Loading happens through batch operations or continuous streaming, depending on your architecture.

Batch loads use bulk insert operations or file copies at scheduled intervals. Streaming inserts continuously append data as it arrives. Performance matters here. Cloud warehouses offer bulk load APIs that handle large volumes efficiently. For streaming, specialized sinks like Kafka topics handle continuous flows.
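To illustrate a batch load, the sketch below uses Python's built-in `sqlite3` module as a stand-in for a warehouse. The `sales` table and its columns are invented for the example; a cloud warehouse would typically use its own bulk load command or API instead.

```python
import sqlite3


def load_batch(conn, rows):
    """Insert a whole batch in one transaction: all rows land, or none do."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT INTO sales (order_id, amount) VALUES (?, ?)",
            rows,
        )


# In-memory database standing in for the destination.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (order_id TEXT PRIMARY KEY, amount REAL)")

load_batch(conn, [("o-1", 19.99), ("o-2", 5.00), ("o-3", 42.50)])

row_count = conn.execute("SELECT COUNT(*) FROM sales").fetchone()[0]
print(row_count, "rows loaded")
```

Wrapping the batch in a single transaction means a failure partway through leaves the table unchanged rather than half loaded.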

At completion, cleaned sales data might land in a Snowflake warehouse table for analyst queries while summaries update real-time dashboards. The data has reached its destination, ready for use by analytics tools, BI platforms, or machine learning models.

By following these stages systematically, organizations convert raw data into actionable intelligence. Each stage addresses specific challenges, from discovering what data exists to ensuring its quality and availability. Next, we’ll explore why executing these stages well matters and how to address the inevitable challenges that arise.

Benefits of a strong data ingestion process

A well-planned data ingestion strategy offers numerous benefits that extend past simply moving data. It ensures the data can truly power insights and decisions. Key benefits include:

Data accessibility

Data ingestion breaks down silos by consolidating information from disparate sources into one place. This creates a comprehensive view of your business. Combine marketing, sales, and customer support data to achieve a 360-degree customer view. Without proper ingestion, data remains scattered across different tools and databases, forcing analysts to manually gather information piecemeal.

When you centralize data through ingestion, everyone accesses the same consistent information. This promotes a data-driven culture where decisions rely on complete pictures rather than fragments. A retail company might discover that their highest-value customers come from social media campaigns only after connecting advertising spend data with purchase history and support tickets.

The impact reaches across the entire organization. Teams across departments can finally collaborate effectively when working from the same data foundation. Marketing can see how their campaigns affect support ticket volume. Sales can understand which product features drive the most customer satisfaction. Product teams can correlate usage patterns with renewal rates. This unified view transforms how organizations operate, breaking down not just data silos but organizational ones too.

Timely insights through improved data availability

Efficient ingestion, particularly real-time processing, makes data available exactly when you need it. This translates to faster reporting and quicker responses to business events. Real-time ingestion powers up-to-the-minute dashboards and alerts critical for finance teams monitoring fraud or IT teams tracking service health.

Even batch ingestion improves timeliness. Daily data refreshes beat monthly updates every time. Fresh data becomes a competitive advantage. While competitors make decisions on last month’s numbers, you’re acting on yesterday’s or even this morning’s data.

Foundation for advanced analytics

Strong data ingestion forms the bedrock of machine learning and AI initiatives. These technologies require large, diverse datasets from multiple sources to function effectively. Without proper ingestion feeding your analytics platform, advanced techniques remain theoretical.

Consider predictive maintenance using IoT sensor streams or real-time recommendation engines. These applications need continuous data flows that only streaming ingestion can provide. A manufacturing company might predict equipment failures hours in advance by ingesting and analyzing thousands of sensor readings per second, preventing costly downtime.

The relationship between ingestion and AI goes deeper than just data availability. Quality ingestion processes ensure the consistency and completeness that machine learning models demand. Models trained on poorly ingested data with missing values, duplicates, or inconsistent formats produce unreliable predictions. By establishing strong ingestion practices, you create the data foundation that makes AI initiatives successful rather than expensive experiments. Companies investing in AI without first solving ingestion often find themselves rebuilding their data pipelines mid-project at significant cost and delay.

Data quality

Good ingestion processes include validation and cleaning steps that improve data reliability. When you standardize and normalize data from all sources during ingestion, you eliminate confusion. Consistent date formats, unified categories, and early detection of duplicates or anomalies prevent downstream havoc.

High data quality builds trust in analytics. Decision-makers can confidently act on insights knowing the underlying data has been validated. Ingestion safeguards the accuracy and reliability of data feeding your analytics engine, turning raw information into trustworthy intelligence.

Flexibility

A solid ingestion framework adapts as your data environment changes. Modern businesses constantly adopt new SaaS tools and data sources. Flexible ingestion processes incorporate these additions without starting from scratch. Your two-source pilot project can grow to handle dozens of inputs as the business expands.

Cloud-based and distributed ingestion tools scale automatically with data volume increases. Manual data pulls fail under pressure, creating gaps and delays. Automated pipelines handle growth gracefully, whether you’re processing gigabytes today or terabytes tomorrow.

Cost savings

Automating data ingestion frees your team from tedious data wrangling. An often-cited estimate holds that data professionals spend 80% of their time on preparation. Automation flips this ratio, letting skilled staff focus on analysis and insights rather than moving files around.

Time savings aside, automation reduces errors inherent in manual processes. Fewer mistakes mean fewer fire drills and emergency fixes. The cost savings compound: less maintenance overhead, reduced staffing needs for routine tasks, and fewer bad decisions based on faulty data. One financial services firm cut their monthly data preparation time from 120 hours to 10 hours after implementing automated ingestion.

The efficiency gains ripple through the organization. When data arrives reliably and automatically, downstream processes become predictable. Reports generate on schedule. Dashboards refresh without intervention. Data scientists spend time building models instead of chasing down missing datasets. This predictability allows teams to plan better and deliver more value. Organizations often find that the initial investment in ingestion automation pays for itself within months through labor savings alone, before even counting the value of faster, better decisions.

Compliance and security benefits

Effective ingestion processes help maintain regulatory compliance through controlled, documented data handling. Pipelines can encrypt data in transit, enforce access controls, and handle sensitive information appropriately. You might hash personal data at the ingestion point to protect privacy while maintaining analytical value.
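One possible way to hash personal data at the ingestion point is a keyed hash, sketched below with Python's standard `hmac` and `hashlib` modules. The `pseudonymize` helper, the normalization rules, and the hard-coded key are illustrative assumptions; in practice the key would come from a secrets manager, and whether hashing is sufficient depends on the regulation in question.

```python
import hashlib
import hmac


def pseudonymize(value, secret_key):
    """Return a keyed SHA-256 digest of a normalized value.

    The same input always maps to the same token, so joins and counts
    still work, but the raw value is not stored downstream.
    """
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()


# Placeholder key for the example only; never hard-code a real key.
SECRET_KEY = b"example-key-do-not-use"

token_a = pseudonymize("Ada@Example.com ", SECRET_KEY)
token_b = pseudonymize("ada@example.com", SECRET_KEY)
print(token_a == token_b)
```

Using a keyed hash rather than a plain one makes it harder for someone without the key to recover values by hashing a list of known email addresses.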

Centralized data repositories enable easier auditing than scattered silos. You can track data lineage, monitor usage patterns, and demonstrate compliance with regulations like GDPR or HIPAA. Rather than hoping individual departments handle data correctly, you establish and enforce standards through your ingestion pipeline.

These benefits compound over time. Initial investments in proper data ingestion pay dividends through faster insights, better decisions, and reduced operational friction. Organizations that treat ingestion as a strategic capability rather than a technical necessity position themselves to fully leverage their data assets.

7 Challenges to Data Ingestion

Data ingestion is a complex process, and comes with its fair share of obstacles. One of the biggest is that the source systems generating the data are often completely outside of the control of data engineers. Others include:

Time efficiency

When data engineers are tasked with ingesting data manually, they can face significant challenges in collecting, connecting, and analyzing that data in a centralized, streamlined way.

They need to write code that enables them to ingest data and create manual mappings for data extraction, cleaning, and loading. That not only causes frustration—it also shifts engineering focus away from critical, high-value tasks to repetitive, redundant ones.

This work can be easily automated for common data ingestion paths, for example connecting Salesforce to your data warehouse. However, connectors from data ingestion tools may not be available for more esoteric source systems. Or, the connector may not have a specific field for a particular source. In these cases, hard coding is often still required.

Schema changes and rise in data complexity

Since source systems are often outside of a data engineer’s control, they can be surprised by changes in the schema, or organization of the data. Even small changes in data type or the addition of a column can have a negative impact across the data pipeline.

Either the ingestion won’t happen or some automated ingestion tools such as Fivetran will create new tables for the updated schema. While in this case the data does get ingested, this can impact transformation models and other dependencies across the pipeline.
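One way to catch this kind of change before it reaches downstream models is to compare the columns a pipeline expects against what actually arrives. The sketch below is a minimal illustration; the column names and type labels are made up, and a real check would read them from source and destination metadata.

```python
def detect_schema_drift(expected, observed):
    """Compare two {column: type} mappings and report the differences."""
    expected_cols = set(expected)
    observed_cols = set(observed)
    return {
        "added": sorted(observed_cols - expected_cols),
        "removed": sorted(expected_cols - observed_cols),
        "retyped": sorted(
            column
            for column in expected_cols & observed_cols
            if expected[column] != observed[column]
        ),
    }


# Hypothetical schemas: what the pipeline was built for vs. today's extract.
expected = {"id": "int", "email": "str", "signup_date": "date"}
observed = {"id": "str", "email": "str", "plan": "str"}

drift = detect_schema_drift(expected, observed)
print(drift)
```

A pipeline could alert on any non-empty list here, or block the load only for removals and type changes while allowing new columns through.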

Simply put, data and pipelines are becoming more complex. Data use is no longer the remit of a single data team; rather, data use cases are exploding across the business, and multiple stakeholders across multiple departments are generating and analyzing data from highly variable sources. These sources themselves are constantly changing, and it’s difficult for data engineers to stay abreast of the latest evolution of data inputs.

When data is that diverse and complex, it can be challenging to extract value from it. The prevalence of anomalous data that requires cleaning, converting, and centralizing before it can be used presents a significant data ingestion challenge.

Changing ETL schedules

One organization saw its customer acquisition machine learning model suffer significant drift due to data pipeline reliability issues. Facebook had changed its data delivery schedule from every 24 hours to every 12.

Their team’s ETLs were set to pick up data only once per day, so this meant that suddenly half of the campaign data that was being sent wasn’t getting processed or passed downstream, skewing their new user metrics away from “paid” and towards “organic.”
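The source does not say exactly how that team's jobs selected data, but one way a schedule change like this can drop half the records is a job that only picks up the most recent delivery. The sketch below contrasts that with tracking a watermark (the timestamp of the last delivery processed). The delivery times, row counts, and function names are hypothetical.

```python
from datetime import datetime

# Hypothetical deliveries after the source moved to a 12-hour schedule.
deliveries = [
    {"delivered_at": datetime(2024, 1, 1, 12, 0), "rows": 500},
    {"delivered_at": datetime(2024, 1, 2, 0, 0), "rows": 500},
]

# Timestamp of the last delivery the daily job had already processed.
last_processed = datetime(2024, 1, 1, 0, 0)


def pick_latest_only(deliveries):
    """Assumes exactly one delivery per run: takes only the newest one."""
    return deliveries[-1:]


def pick_since_watermark(deliveries, watermark):
    """Takes every delivery newer than the last one processed."""
    return [d for d in deliveries if d["delivered_at"] > watermark]


naive_rows = sum(d["rows"] for d in pick_latest_only(deliveries))
watermark_rows = sum(
    d["rows"] for d in pick_since_watermark(deliveries, last_processed)
)
print(naive_rows, watermark_rows)
```

With a watermark, the job's correctness no longer depends on the source keeping the same delivery cadence.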

Parallel architectures

Streaming and batch processing often require different data pipeline architectures. This can add complexity and require additional resources to manage well.

Job failures and data loss

Not only can ingestion pipelines fail, but orchestrators such as Airflow that schedule or trigger complex multi-step jobs can fail as well. This can lead to stale data or even data loss.

When the senior director of data at Freshly, Vitaly Lilich, evaluated ingestion solutions he paid particular attention to how each performed with respect to data loss. “Fivetran didn’t have any issues with that whereas with other vendors we did experience some records that would have been lost–maybe 10 to 20 a day,” he said.
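Transient failures are commonly handled by retrying a job with increasing delays before giving up and alerting someone. The sketch below is a generic illustration rather than any particular orchestrator's API; `run_with_retries`, its parameters, and the simulated `flaky_job` are hypothetical.

```python
import time


def run_with_retries(job, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Run `job`, retrying with exponential backoff; re-raise on final failure."""
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception:
            if attempt == max_attempts:
                raise  # surface the failure so it is not silently lost
            sleep(base_delay * 2 ** (attempt - 1))


# Simulated job that fails twice before succeeding.
attempts = {"count": 0}


def flaky_job():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("source unavailable")
    return "loaded"


delays = []  # record the waits instead of actually sleeping
result = run_with_retries(flaky_job, sleep=delays.append)
print(result, delays)
```

Retries help with stale data from temporary outages; they do not by themselves prevent data loss if the source discards records before the job succeeds.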

Duplicate Data

Duplicate data is a frequent challenge that arises during the ingestion process. It often results from jobs re-running because of human or system error.
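A common defense against re-runs is to make the load idempotent, so that loading the same batch twice has the same effect as loading it once. The sketch below shows one way to do this with SQLite's `INSERT OR IGNORE` and a primary key; the `events` table and its columns are invented for the example, and other databases offer similar upsert or merge statements.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (event_id TEXT PRIMARY KEY, payload TEXT)")


def load_idempotent(conn, rows):
    """Insert rows, skipping any whose primary key is already present."""
    with conn:
        conn.executemany(
            "INSERT OR IGNORE INTO events (event_id, payload) VALUES (?, ?)",
            rows,
        )


batch = [("e-1", "signup"), ("e-2", "purchase")]
load_idempotent(conn, batch)
load_idempotent(conn, batch)  # simulated accidental re-run

event_count = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
print(event_count, "rows after two runs")
```

This approach depends on the source providing a stable unique identifier for each record.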

Compliance Requirements

Data is one of a company’s most valuable assets—and it needs to be treated with the utmost care, particularly when that data is sensitive or personally-identifying information about customers. When data moves across various stages and through various points within the ingestion process, it risks noncompliant usage—and improper data ingestion can lead at best to infringement of customer trust and at worst regulatory fines.

Best Practices for Data Ingestion

While data ingestion comes with multiple challenges, these best practices can help reduce obstacles and enable teams to ingest, analyze, and leverage their data with confidence.

Automated Data Ingestion

Automated data ingestion solves many of the challenges posed by manual data ingestion efforts. Automated data ingestion acknowledges both the inevitability and the difficulty of transforming raw data into a usable form, especially when that raw data derives from multiple disparate sources at large volumes.

Automated data ingestion leverages data ingestion tools that automate recurring processes throughout the data ingestion process. These tools use event-based triggers to automate repeatable tasks, which saves time for data orchestrators while reducing human error.

Automated data ingestion has multiple benefits: it shortens the time needed for data ingestion while adding a layer of quality control. Because it frees up valuable resources on the data engineering team, it makes data ingestion efforts more scalable across the organization. Finally, by reducing manual processes, it cuts data processing time, which helps end users get the insights they need to make data-driven decisions more quickly.

Create data SLAs

One of the best ways to determine your ingestion approach (streaming vs batch) is to gather the use case requirements from your data consumers and work backwards. Data SLAs cover:

- What is the business need?

- What are the expectations for the data?

- When does the data need to meet expectations?

- Who is affected?

- How will we know when the SLA is met and what should the response be if it is violated?
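The questions above can be captured in a small, checkable definition. The sketch below covers just one kind of expectation, freshness; the `FreshnessSLA` class, the table name, the 24-hour threshold, and the owner label are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class FreshnessSLA:
    table: str                # what data the expectation applies to
    max_staleness: timedelta  # when the data needs to meet expectations
    owner: str                # who is affected and should be notified


def check_freshness(sla, last_loaded_at, now):
    """Report whether the most recent load is within the agreed staleness."""
    staleness = now - last_loaded_at
    met = staleness <= sla.max_staleness
    return {
        "table": sla.table,
        "met": met,
        "staleness": staleness,
        "notify": None if met else sla.owner,
    }


sla = FreshnessSLA(
    table="daily_sales",
    max_staleness=timedelta(hours=24),
    owner="sales-analytics",
)
now = datetime(2024, 1, 2, 9, 0)

on_time = check_freshness(sla, datetime(2024, 1, 2, 1, 0), now)
late = check_freshness(sla, datetime(2023, 12, 31, 1, 0), now)
print(on_time["met"], late["met"], late["notify"])
```

A 24-hour staleness budget like this one points toward batch ingestion; a budget of seconds or minutes would point toward streaming or micro-batches.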

Decouple your operational and analytical databases

Some organizations, especially those early in their data journey, will directly integrate their BI or analytics database with an operational database like Postgres. Decoupling these systems helps prevent issues in one from cascading into the other.

Data quality checks at ingest

Checking for data quality at ingest is a double-edged sword. Creating these tests for every possible instance of bad data across every pipeline is not scalable. However, this process can be beneficial in situations where thresholds are clear and the pipelines are fueling critical processes.

Some organizations will create data circuit breakers that will stop the data ingestion process if the data doesn’t pass specific data quality checks. There are trade-offs. Make your data quality checks too stringent and you impede data access. Make them too permissive and bad data can enter and wreak havoc on your data warehouse. Dropbox explains how they faced this dilemma in attempting to scale their data quality checks.

The solution to this challenge? Be selective with how you deploy circuit breakers and leverage data observability to help you quickly detect and resolve any data incidents that arise before they become problematic.
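For a pipeline where thresholds are clear, a circuit breaker can be as simple as a row count check that raises before anything is loaded. The sketch below is illustrative only; the `CircuitBreakerTripped` exception, the expected volume, and the 50% tolerance are assumptions that a real team would tune per pipeline.

```python
class CircuitBreakerTripped(Exception):
    """Raised to halt ingestion when a batch looks clearly wrong."""


def check_row_count(row_count, expected, tolerance=0.5):
    """Stop the pipeline if the batch size is far outside the expected range."""
    lower = expected * (1 - tolerance)
    upper = expected * (1 + tolerance)
    if not lower <= row_count <= upper:
        raise CircuitBreakerTripped(
            f"row count {row_count} outside [{lower:.0f}, {upper:.0f}]"
        )
    return row_count


# A normal-sized daily file passes; an empty one trips the breaker.
check_row_count(980, expected=1000)

try:
    check_row_count(0, expected=1000)
except CircuitBreakerTripped as error:
    print("Ingestion halted:", error)
```

Widening `tolerance` trades protection for availability, which is the trade-off discussed above.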

How data observability can complement your data ingestion

Testing critical data sets and checking data quality at ingest is an important first step in ensuring high-quality, reliable data that end users can trust. It’s just that, though: a first step. What if you’re trying to scale beyond your known issues? How can you ensure the reliability of your data when you’re unsure where problems may lie?

Data plus AI observability solutions like Monte Carlo can help data teams by providing visibility into failures at the ingestion, orchestration, and transformation levels. End-to-end lineage helps trace issues to the root cause and understand the blast radius at the consumer (BI) level.

Data observability provides coverage across the modern data stack—at ingest in the warehouse, across the orchestration layer, and ultimately at the business intelligence stage—to ensure the reliability of data at each stage of the pipeline.

Data ingestion paired with data observability is the combination that can truly activate a company’s data into a game-changer for the business.

Interested in learning more about how data observability can complement your data ingestion? Reach out to Monte Carlo today.
